Google DeepMind is opening up access to Project Genie, its AI tool for creating interactive game worlds from text prompts or images.

Starting Thursday, Google AI Ultra subscribers in the US can play with the experimental research prototype, which is powered by a combination of Google’s latest world model Genie 3, its image generation model Nano Banana Pro, and Gemini.

Coming five months after the Genie 3 research preview, the move is part of a broader push to collect user feedback and training data as DeepMind races to develop more capable world models.

World models are AI systems that generate an internal representation of an environment and can be used to predict future outcomes and plan actions. Many AI leaders, including those at DeepMind, believe that world models are an important step toward achieving artificial general intelligence (AGI). But in the nearer term, labs like DeepMind envision a go-to-market plan that starts with video games and other forms of entertainment and branches out into training agents (aka robots) in simulation.

DeepMind’s release of Project Genie comes as the world model race begins to heat up. Fei-Fei Li’s World Labs late last year released its first commercial product, called Marble. Runway, the AI video generation startup, has also launched a world model recently. And former Meta chief scientist Yann LeCun’s startup AMI Labs will also focus on developing world models.

“I think it’s exciting to be in a place where we have a lot of people who can access it and give us feedback,” Shlomi Fruchter, a director of research at DeepMind, told TechCrunch in a video interview, smiling ear to ear and clearly excited about the release of Project Genie.

DeepMind researchers who spoke to TechCrunch were upfront about the experimental nature of the tool. It can be inconsistent, sometimes creating amazing game worlds, other times producing confusing results that are off the mark. Here’s how it works.

You start with a “world sketch” by providing text prompts for both the environment and a main character, which you can later maneuver through the world in either first- or third-person view. Nano Banana Pro creates an image based on your prompts that you can, in theory, modify before Genie uses the image as a jumping-off point for an interactive world. The modifications mostly work, but the model sometimes stumbles and gives you purple hair when you ask for green.

You can also use real-life photos as a baseline for the model to build a world on, which, again, is hit or miss. (More on that later.)

Once you are satisfied with the image, it will take a few seconds for Project Genie to create an explorable world. You can also remix existing worlds into new interpretations by building on top of their prompts, or explore curated worlds in the gallery or through the randomizer tool for inspiration. You can download videos of the world you are exploring.

DeepMind currently grants only 60 seconds of world generation and navigation, in part due to budget and compute constraints. Because Genie 3 is an auto-regressive model, it requires a lot of dedicated compute, which puts a tight ceiling on how much DeepMind can deliver to users.

“The reason we’re limiting it to 60 seconds is because we want to bring it to more users,” Fruchter said. “Basically when you use it, there’s a chip somewhere that’s all yours and it’s dedicated to your session.”

He added that extending sessions beyond 60 seconds would add little incremental value to the testing.

“The environments are interesting, but at a certain point, because of their level of interaction, the dynamics of the environment are quite limited. However, we see that as a limitation that we hope to improve on.”

Humor works, realism doesn’t

When I used the model, the safety guardrails were already up and running. I couldn’t create anything resembling nudity, nor could I create worlds that even remotely smacked of Disney or other copyrighted material. (In December, Disney hit Google with a cease-and-desist, accusing the company’s AI models of copyright infringement by training on Disney’s characters and IP and generating unauthorized content, among other things.) I couldn’t even get Genie to create worlds of mermaids exploring undersea fantasy lands or ice queens in their winter castles.

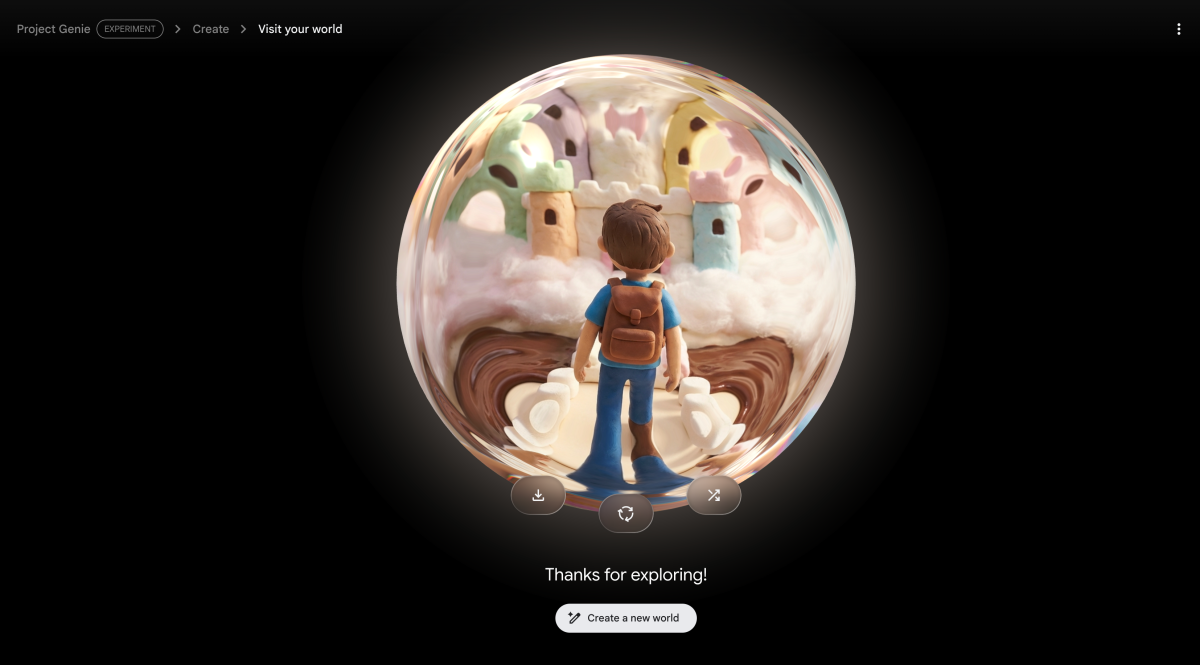

Still, the demo was very impressive. The first world I built was an attempt to live out a little childhood fantasy, in which I could explore a castle in the clouds made of marshmallows, with a stream of chocolate sauce and trees made of candy. (Yes, I was a chubby kid.) I asked the model to do it in claymation style, and it delivered a whimsical world that my childhood self would have eaten up, with the castle’s pastel-and-white spires and turrets looking puffy and tasty enough to tear off a chunk and dunk it in the chocolate moat. (Video above.)

That said, Project Genie still has some kinks to work out.

The model excels at creating worlds based on artistic prompts, such as watercolors, anime style, or classic cartoon aesthetics. But it tends to fail when it comes to photorealistic or cinematic worlds, which often come out looking more like a video game than real people in a real setting.

It also doesn’t always respond well when given real photos to work with. When I gave it a photo of my office and asked it to create a world based on the photo exactly as it was, it gave me a world with some of the same furnishings as my office (a wooden table, plants, a gray couch), positioned differently. And it looked sterile and digital, not lifelike.

When I fed it a photo of my desk with a stuffed toy on it, Project Genie animated the toy navigating through the space, and other objects even occasionally reacted as it passed them.

That interactivity is something DeepMind is working on improving. There were many times when my characters walked straight through walls or other solid objects.

When DeepMind initially released Genie 3, the researchers emphasized how the model’s auto-regressive architecture meant it could remember what it had generated, so I wanted to test that by returning to parts of the environment it had already generated to see whether they were the same. For the most part, the model succeeded. In one case, I created a cat exploring another table, and only once, when I turned back to the right side of the table, did the model generate a second mug.

The part I found most frustrating was the way you navigate the space: the arrow keys to look around, the spacebar to jump or climb, and the WASD keys to move. I’m not a gamer, so this didn’t come naturally to me, but the keys were often unresponsive, or they sent me in the wrong direction. Trying to walk from one side of a room to a door on the other side often became a messy zigzagging exercise, like trying to steer a shopping cart with a flat tire.

Fruchter assured me that his team was aware of these shortcomings, reminding me again that Project Genie is an experimental prototype. In the future, he said, the team hopes to enhance the realism and improve interaction capabilities, including giving users more control over actions and environments.

“We don’t think of [Project Genie] as an end-to-end product that people can come back to every day, but we think there is already a glimpse of something interesting and unique that isn’t possible otherwise,” he said.