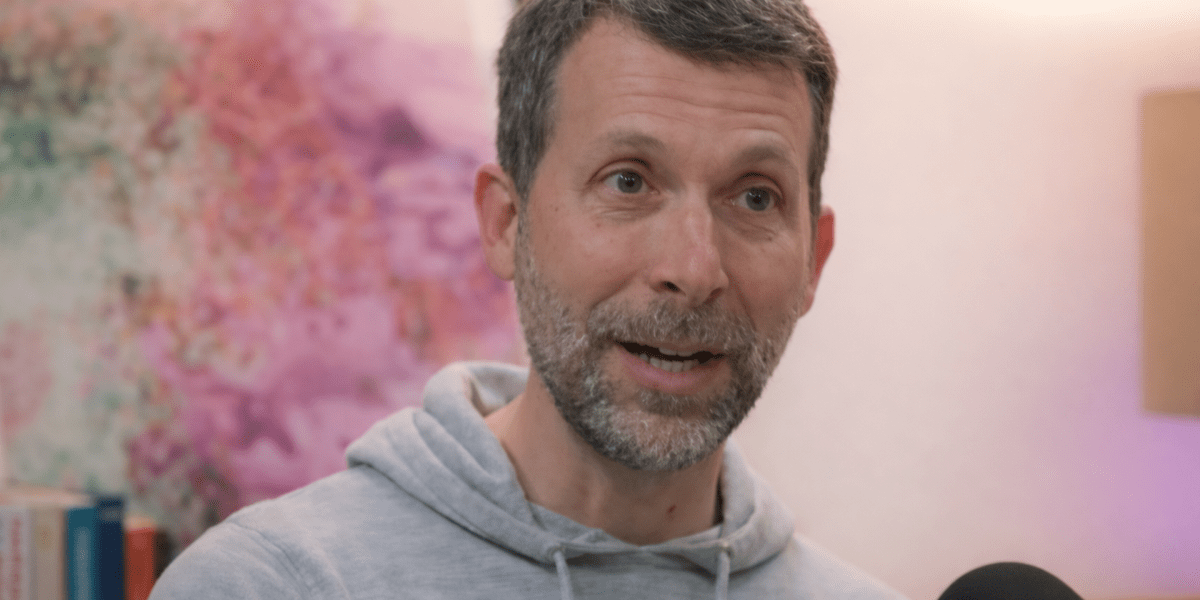

David Silver, a well-known Google DeepMind researcher who played a critical role in many of the company’s most famous successes, has left the company to found his own startup.

Silver is launching a new startup called Ineffable Intelligence, based in London, according to a person with direct knowledge of Silver’s plans. The company is actively recruiting AI researchers and seeking venture capital funding, the person said.

Google DeepMind notified staff of Silver’s departure earlier this month, the person said. Silver had been on sabbatical in the months leading up to his departure and never formally returned to his role at DeepMind.

A Google DeepMind spokesperson confirmed Silver’s departure in an emailed statement to Fortune. “Dave’s contributions have been invaluable and we are grateful for the impact he has made on our work at Google DeepMind,” the spokesperson said.

Silver could not immediately be reached for comment.

Ineffable Intelligence was formed in November 2025 and Silver was appointed director of the company on January 16, according to documents filed with UK business registry Companies House.

Silver’s personal webpage also now lists his contact as Ineffable Intelligence and provides an Ineffable Intelligence email address, though it still says that he “leads the reinforcement learning team” at Google DeepMind.

In addition to his work at Google DeepMind, Silver is a professor at University College London, a position he continues to hold.

A key figure behind many of DeepMind’s successes

Silver was one of DeepMind’s first employees when the company was founded in 2010. He knew DeepMind cofounder Demis Hassabis from university. Silver played a key role in many of the company’s early advances, including AlphaGo’s landmark 2016 achievement, which showed that an AI program could beat the world’s best players at the ancient strategy game Go.

He was also a key member of the teams that developed AlphaStar, an AI program that can beat the world’s best human players at the complex video game StarCraft II; AlphaZero, which can play chess and shogi as well as Go at a superhuman level; and MuZero, which can master many different types of games better than people even when it starts with no knowledge of the game, including no knowledge of its rules.

More recently, he worked with the DeepMind team that created AlphaProof, an AI system that can solve problems from the International Mathematical Olympiad. He is also one of the authors of the 2023 research paper that debuted the original Gemini family of Google AI models. Gemini is now Google’s leading commercial AI product and brand.

Finding a path to AI ‘superintelligence’

Silver has told friends he wants to get back to “the awe and wonder of solving the hardest problems in AI” and sees superintelligence (AI smarter than any human and potentially smarter than all of humanity) as the biggest unsolved challenge in the field, according to a person familiar with his thinking.

Many other prominent AI researchers have also left established AI labs in recent years to found startups dedicated to advancing superintelligence. Ilya Sutskever, the former chief scientist of OpenAI, founded a company called Safe Superintelligence (SSI) in 2024. That company has raised $3 billion in venture capital funding to date and is reportedly worth as much as $30 billion. Some of Silver’s colleagues who worked on AlphaGo, AlphaZero, and MuZero also recently left to found Reflection AI, a startup that also says it is pursuing superintelligence. Meanwhile, Meta last year reorganized its AI efforts around a new “Superintelligence Labs” led by former Scale AI CEO and founder Alexandr Wang.

More than language models

Silver is known for his work on reinforcement learning, a way to train AI models from experience rather than from historical data. In reinforcement learning, a model takes an action, usually in a game or simulator, and then receives feedback on whether that action helped it achieve a goal. Through trial and error over the course of many actions, the AI learns the best way to accomplish the goal.
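Reinforcement learning is a broad family of methods, and the article does not describe any specific algorithm. As a minimal sketch of the act, get feedback, adjust loop described above, the Python below uses tabular Q-learning, one classic RL algorithm, on a made-up five-cell corridor. The environment, the reward, and all parameter values (`alpha`, `gamma`, `epsilon`) are illustrative assumptions, not anything DeepMind or Silver has described.

```python
import random

# Hypothetical toy environment (not from the article): a corridor of N_STATES
# cells. The agent starts in cell 0 and is rewarded only on reaching the last cell.
N_STATES = 5
ACTIONS = (-1, +1)  # step left, step right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply an action; return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def best_action(q_row: list[float], rng: random.Random) -> int:
    """Index of the highest-valued action, breaking ties at random."""
    best = max(q_row)
    return rng.choice([i for i, v in enumerate(q_row) if v == best])


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: estimate action values purely by trial and error."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Act: usually exploit current estimates, occasionally explore.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q[state], rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # Feedback: move the estimate toward reward + discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q: list[list[float]]) -> list[int]:
    """Best learned move in each non-terminal cell."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])]
            for s in range(N_STATES - 1)]


policy = greedy_policy(train())
print(policy)  # [1, 1, 1, 1]: always step right toward the goal
```

Nobody tells the agent that "right" is correct; it infers this from which sequences of actions eventually produced the reward, which is the trial-and-error idea in miniature.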

The researcher has long been considered one of the staunchest proponents of reinforcement learning, arguing that it is the only way to create artificial intelligence that will one day surpass human knowledge.

In an episode of a Google DeepMind podcast released in April, he said that large language models (LLMs), the type of AI responsible for much of the recent excitement about AI, are powerful but also limited by human knowledge. “We want to go beyond what people know and to do that we need a different kind of approach and that kind of approach will require our AIs to actually figure things out for themselves and to discover new things that people don’t know,” he said. He called for a new “era of experience” in AI based on reinforcement learning.

Today, LLMs have a “pretraining” phase that uses what is called unsupervised learning: they ingest large amounts of text and learn to predict which words are statistically most likely to follow other words in a given context. They also have a “post-training” phase that uses reinforcement learning, usually with human evaluators looking at the model’s outputs and giving the AI feedback, sometimes just in the form of a thumbs up or thumbs down. This feedback makes the model more likely to produce helpful outputs.
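Real LLMs are large neural networks that predict subword tokens after training on enormous corpora, so the following is only an analogy. As a rough sketch of the "predict which word is likely to follow" idea, the Python below counts adjacent word pairs (a bigram model) in a hypothetical three-sentence corpus and turns those counts into next-word probabilities. The corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def next_word_probs(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn follow-counts into probabilities; empty dict for unseen words."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


# Hypothetical training text, standing in for "large amounts of text".
corpus = [
    "the cat sat on the mat",
    "the cat chased the dog",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(next_word_probs(model, "sat"))  # {'on': 1.0}
print(next_word_probs(model, "the"))  # "cat" and "dog" each 1/3, "mat" and "rug" each 1/6
```

Everything this model "knows" comes from the text it was given, which is the limitation Silver points to: a predictor trained this way can only echo patterns that people have already written down.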

But this type of training ultimately relies on what people know, both because pretraining depends on what people have previously learned and written, and because the way LLMs are post-trained ties the reinforcement learning to human preferences. And in some cases, human intuition can be wrong or short-sighted.

Famously, for example, on move 37 of the second game of AlphaGo’s 2016 match against Go world champion Lee Sedol, AlphaGo made a move so unusual that all the human experts commenting on the game were sure it was a mistake. It later proved key to AlphaGo winning the game. Similarly, human chess players often describe AlphaZero’s style of play as “alien,” yet its counterintuitive moves often turn out to be strong.

If human evaluators were judging such moves, as they do in the kind of reinforcement learning used in LLM post-training, they might give them a “thumbs down” because human experts would see them as mistakes. This is why reinforcement learning purists like Silver say that to reach superintelligence, AI must not only exceed human knowledge but reject it, learning to achieve goals from scratch and working from first principles.
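To make this argument concrete, here is a minimal toy in Python with every number assumed for illustration. A learner chooses between a "conventional" move and an "unusual" one that, in this made-up setup, wins more often. Trained on a simulated evaluator's thumbs up or thumbs down, it settles on the conventional move; trained on the game's own win/loss signal, it finds the unusual one. This is an epsilon-greedy two-armed bandit, a drastic simplification of both LLM post-training and systems like AlphaGo, not how either is actually built.

```python
import random

# Hypothetical numbers, chosen only for illustration: the "unusual" move wins
# more often, but an evaluator judging by convention gives it a thumbs down.
WIN_PROB = {"conventional": 0.3, "unusual": 0.7}
HUMAN_THUMBS_UP = {"conventional": 1.0, "unusual": 0.0}
MOVES = list(WIN_PROB)


def outcome_reward(move: str, rng: random.Random) -> float:
    """Reward from the game itself: 1 for a win, 0 for a loss."""
    return 1.0 if rng.random() < WIN_PROB[move] else 0.0


def human_reward(move: str, rng: random.Random) -> float:
    """Reward from a simulated evaluator's thumbs up (1) or thumbs down (0)."""
    return HUMAN_THUMBS_UP[move]


def learn_preferred_move(reward_fn, steps: int = 5000, epsilon: float = 0.1,
                         seed: int = 0) -> str:
    """Epsilon-greedy bandit: keep a running average reward for each move."""
    rng = random.Random(seed)
    value = {m: 0.0 for m in MOVES}
    count = {m: 0 for m in MOVES}
    for _ in range(steps):
        if rng.random() < epsilon:
            move = rng.choice(MOVES)           # explore
        else:
            move = max(MOVES, key=value.get)   # exploit best estimate so far
        r = reward_fn(move, rng)
        count[move] += 1
        value[move] += (r - value[move]) / count[move]  # incremental mean
    return max(MOVES, key=value.get)


print(learn_preferred_move(human_reward))    # conventional
print(learn_preferred_move(outcome_reward))  # unusual
```

The learning rule is identical in both runs; only the source of the reward differs. That is the distinction the purists draw: whatever supplies the feedback sets a ceiling on what the system can discover.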

Silver has said Ineffable Intelligence will seek to build “an endlessly learning superintelligence that can discover for itself the foundations of all knowledge,” according to the person familiar with his thinking.