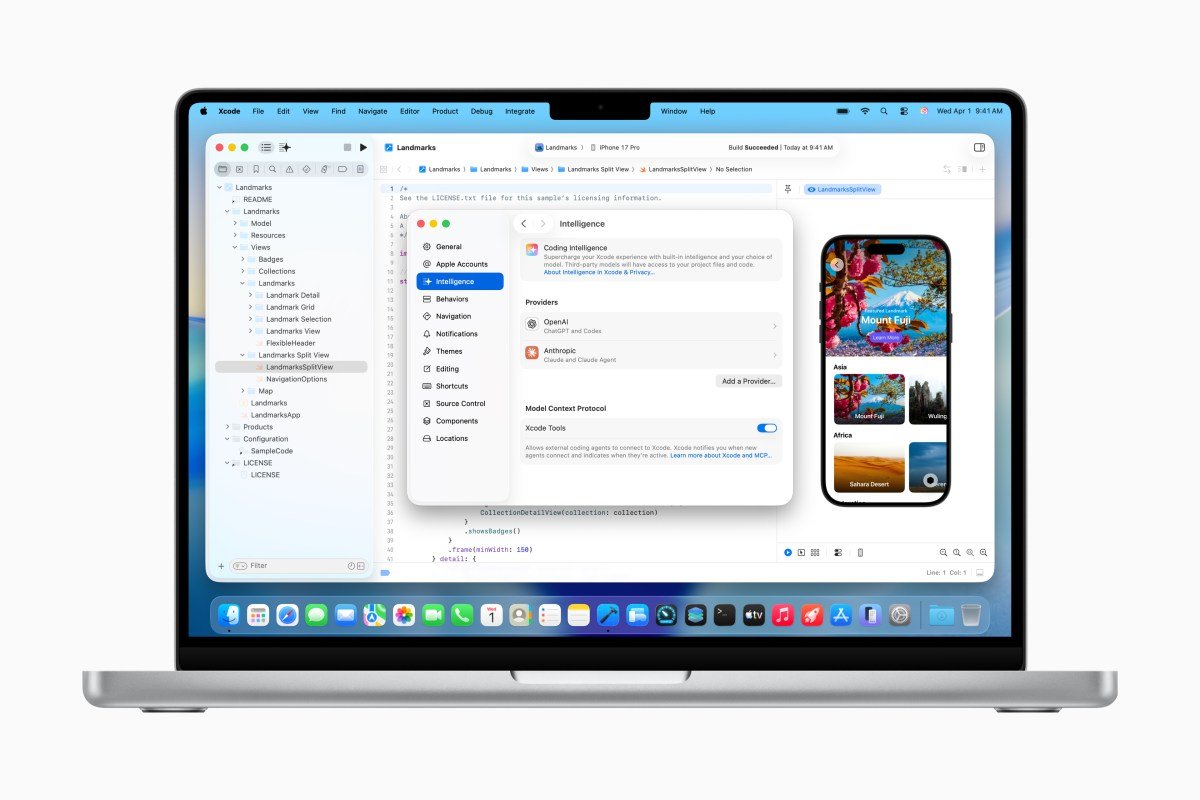

Apple is bringing agentic coding to Xcode. On Tuesday, the company announced the release of Xcode 26.3, which will allow developers to use agentic tools, including Anthropic’s Claude Agent and OpenAI’s Codex, directly in Apple’s official app development suite.

The Xcode 26.3 Release Candidate is available now to all Apple developers from the company’s developer website and will reach the App Store later.

This latest update comes on the heels of the Xcode 26 release last year, which first introduced support for ChatGPT and Claude within Apple’s integrated development environment (IDE), used by those building apps for iPhone, iPad, Mac, Apple Watch, and other Apple hardware platforms.

The integration of agentic coding tools allows AI models to tap into more of Xcode’s features to perform their tasks and carry out more complex automation.

The models also have access to Apple’s current developer documentation to ensure they’re using the latest APIs and following best practices as they build.

At launch, agents can help developers explore their project and understand its structure and metadata, then build the project and run tests to find and fix any errors.

To prepare for this launch, Apple said it worked closely with Anthropic and OpenAI to design the new experience. Specifically, the company says it did a lot of work to optimize token usage and tool calling, so that agents work effectively with Xcode.

Xcode uses MCP (Model Context Protocol) to expose its capabilities to agents and connect them to its tools. That means Xcode can now work with any external MCP-compatible agent for things like project discovery, changes, file management, previews and snippets, and access to the latest documentation.
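MCP messages are JSON-RPC 2.0 requests, so a tool invocation can be pictured as a small JSON payload. The sketch below builds a `tools/list` and a `tools/call` request in Python. The tool name `build_project` and its `scheme` argument are hypothetical placeholders for illustration, not names Xcode is known to expose.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids should be unique within a session


def mcp_request(method, params=None):
    """Build a JSON-RPC 2.0 request of the kind MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask the server which tools it exposes.
list_tools = mcp_request("tools/list")

# Call one tool by name. "build_project" and "scheme" are hypothetical.
call_tool = mcp_request(
    "tools/call",
    {"name": "build_project", "arguments": {"scheme": "MyApp"}},
)

print(json.dumps(call_tool, indent=2))
```

An agent would typically send `tools/list` first to find out what the server offers, then issue `tools/call` requests for the tools it needs.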

Developers who want to try out the agentic coding feature must first download the agents they want to use from Xcode’s settings. They can also connect their accounts with AI providers by signing in or adding their API key. A drop-down menu within the app allows developers to choose which version of the model they want to use (e.g., GPT-5.2-codex vs. GPT-5.1-mini).

In a prompt box on the left side of the screen, developers can use natural language commands to tell the agent what kind of project they want to build or what changes they want to make to their code. For example, they can direct Xcode to add a component to their app that uses one of Apple’s provided frameworks, and describe how it should look and work.

As the agent starts working, it breaks down tasks into small steps, so it’s easy to see what’s going on and how the code is changing. It will also find the documentation it needs before it starts coding. Changes are highlighted visibly within the code, and the project transcript on the side of the screen lets developers know what’s going on under the hood.

Apple believes this transparency could especially help new developers who are learning to code. To that end, the company is hosting a “code-along” workshop on Thursday on its developer site, where users can watch and learn how to use agentic coding tools as they code along in real time with their own copy of Xcode.

At the end of its process, the AI agent verifies that the code it produced works as expected. Armed with its test results, the agent can revise the project if necessary to fix errors or other problems. (Apple notes that asking the agent to think about its plans before writing code sometimes helps improve the process, because it forces the agent to do some planning first.)
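The verify-then-revise cycle described above can be sketched as a bounded loop. This is a minimal illustration, not Apple’s implementation: `verify_and_fix`, `run_tests`, `apply_fix`, and `max_rounds` are hypothetical names, and the toy stand-ins below simply pretend each fix resolves one failing test.

```python
from typing import Callable, List


def verify_and_fix(
    run_tests: Callable[[], List[str]],
    apply_fix: Callable[[List[str]], None],
    max_rounds: int = 3,
) -> bool:
    """Run tests, hand any failures back for a fix, and repeat.

    Returns True once the tests pass, False if max_rounds is exhausted.
    """
    for _ in range(max_rounds):
        failures = run_tests()
        if not failures:
            return True
        apply_fix(failures)
    # One last check after the final fix attempt.
    return not run_tests()


# Toy stand-ins: each "fix" removes one failing test from the list.
pending = ["testLogin", "testLogout"]
fixed_order = []


def fake_run_tests():
    return list(pending)


def fake_apply_fix(failures):
    fixed_order.append(failures[0])
    pending.remove(failures[0])


ok = verify_and_fix(fake_run_tests, fake_apply_fix)
```

Capping the number of rounds keeps the loop from running indefinitely when a fix never makes the tests pass.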

In addition, if developers are not happy with the results, they can easily revert their code to its original state at any point, because Xcode creates milestones every time the agent makes a change.
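The article does not say how Xcode stores these milestones. As a rough mental model only, the idea can be sketched as a snapshot taken after every change, with a revert that restores an earlier snapshot. The `MilestoneLog` class and the file names below are hypothetical.

```python
class MilestoneLog:
    """Record a snapshot of the project each time the agent changes a file."""

    def __init__(self, files):
        self.files = dict(files)
        self.milestones = [dict(files)]  # milestone 0 is the original state

    def apply_change(self, path, new_text):
        self.files[path] = new_text
        self.milestones.append(dict(self.files))
        return len(self.milestones) - 1  # index of the new milestone

    def revert_to(self, index):
        self.files = dict(self.milestones[index])
        # Drop milestones that came after the restore point.
        del self.milestones[index + 1:]


project = MilestoneLog({"ContentView.swift": 'Text("Hello")'})
m1 = project.apply_change("ContentView.swift", 'Text("Hello, agent")')
project.apply_change("Model.swift", "struct Item {}")

# Unhappy with the result: go back to the original state.
project.revert_to(0)
```

A real tool would more likely store diffs or rely on version control than keep full copies, but the revert behavior is the same from the developer’s point of view.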