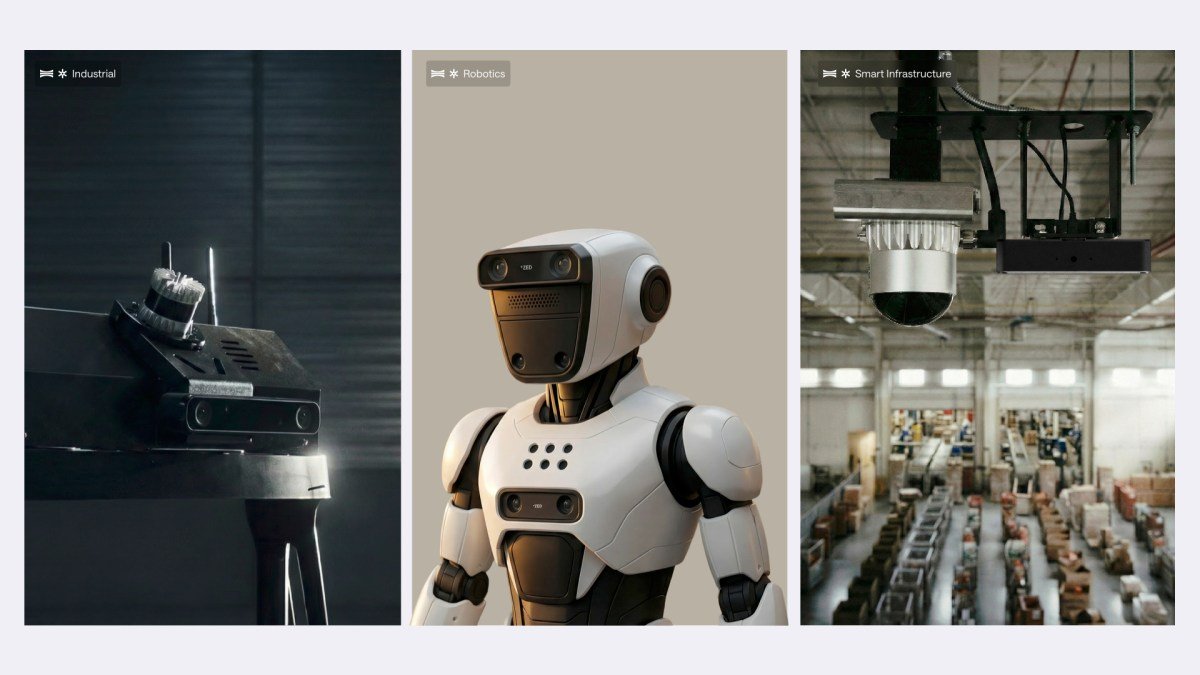

A group of researchers led by Nvidia has released DreamDojo, a new AI system designed to teach robots how to interact with the physical world by watching tens of thousands of hours of human video. The development could dramatically reduce the time and cost needed to train the next generation of humanoid machines.

The research, published this month and involving collaborators from UC Berkeley, Stanford, the University of Texas at Austin, and other institutions, introduces what the group calls "the world’s first robot model of its kind to show strong generalization to different objects and environments after post-training."

At the core of DreamDojo is what the researchers describe as a large-scale video dataset comprising 44,000 hours of diverse egocentric human video, which they call the largest dataset to date for world model pretraining. The dataset, called DreamDojo-HV, is a dramatic leap in scale: it offers 15 times the duration, 96 times as many skills, and 2,000 times as many scenes as the previous largest dataset for world model training, according to the project documentation.

Inside a two-phase training system that teaches robots to see like humans

The system operates in two distinct phases. First, DreamDojo acquires broad physical knowledge from large-scale human data by pre-training with latent actions. It then undergoes post-training on the target embodiment with continuous robot actions. In essence, it learns general physics from watching humans, then fine-tunes that knowledge for specific robot hardware.

For businesses considering humanoid robots, this approach addresses a stubborn bottleneck. Teaching a robot to manipulate objects in unstructured environments has traditionally required large amounts of robot-specific demonstration data, which is expensive and time-consuming to collect. DreamDojo sidesteps this problem by using existing human video, which allows robots to learn from observation before ever touching a physical object.

One of the technical achievements is speed. Through a distillation process, the researchers achieved "real-time interactions at 10 FPS for over 1 minute," a capability that enables practical applications such as live teleoperation and on-the-fly planning. The team has demonstrated that the system works on multiple robot platforms, including GR-1, G1, AgiBot, and YAM humanoid robots, showing what they call "realistic action-conditioned rollouts" across "a wide range of environments and object interactions."

Why Nvidia is betting big on robotics as AI infrastructure spending soars

The release comes at a key moment for Nvidia’s robotics ambitions, and for the wider AI industry. At the World Economic Forum in Davos last month, CEO Jensen Huang stated that AI robotics represents a "once-in-a-generation" opportunity, especially in regions with strong manufacturing bases. According to Digitimes, Huang also stated that the next decade will be "a critical period of rapid development for robotics technology."

The financial stakes are huge. Huang told CNBC’s "Halftime Report" on February 6 that the tech industry’s capital expenditures, which could reach $660 billion this year from the big hyperscalers, are "reasonable, appropriate and sustainable." He described the current moment as "the largest infrastructure construction in human history," with companies like Meta, Amazon, Google, and Microsoft dramatically increasing their spending on AI.

The infrastructure push is already changing the robotics landscape. Robotics startups raised a record $26.5 billion in 2025, according to data from Dealroom. European industrial giants including Siemens, Mercedes-Benz, and Volvo announced robotics partnerships last year, while Tesla CEO Elon Musk has claimed that 80 percent of his company’s future value will come from its Optimus humanoid robots.

How DreamDojo could change robot deployment and testing for businesses

For technical decision makers evaluating humanoid robots, DreamDojo’s most immediate value may lie in its simulation capabilities. Researchers highlight downstream applications including "reliable policy evaluation without real-world deployment and model-based planning for test-time improvement" – capabilities that allow companies to simulate robot behavior before performing expensive physical tests.
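As a rough illustration of what policy evaluation without real-world deployment means in practice, the toy Python sketch below scores two candidate policies by rolling them out inside a simulated model instead of on hardware. Everything in it is hypothetical and chosen for illustration: `toy_world_model` is a one-dimensional stand-in for a learned world model, and `evaluate_policy`, `proportional_policy`, and `idle_policy` are not part of DreamDojo, whose code had not been released at the time of writing.

```python
import random
from typing import Callable

# Toy assumption: the "world" is a single number (e.g. a gripper position),
# and an action is a displacement command. A real world model predicts video.
State = float
Action = float


def toy_world_model(state: State, action: Action, rng: random.Random) -> State:
    """Hypothetical stand-in for a learned world model: predict the next state."""
    return state + action + rng.gauss(0.0, 0.01)  # small prediction noise


def evaluate_policy(
    policy: Callable[[State, State], Action],
    model: Callable[[State, Action, random.Random], State],
    goal: State = 1.0,
    tolerance: float = 0.05,
    episodes: int = 100,
    horizon: int = 50,
    seed: int = 0,
) -> float:
    """Return the fraction of simulated episodes in which the goal is reached."""
    rng = random.Random(seed)
    successes = 0
    for _ in range(episodes):
        state = rng.uniform(-1.0, 0.0)  # random starting position
        for _ in range(horizon):
            state = model(state, policy(state, goal), rng)
            if abs(state - goal) <= tolerance:
                successes += 1
                break
    return successes / episodes


def proportional_policy(state: State, goal: State) -> Action:
    """Move halfway toward the goal at every step."""
    return 0.5 * (goal - state)


def idle_policy(state: State, goal: State) -> Action:
    """Baseline that never moves."""
    return 0.0


if __name__ == "__main__":
    for name, policy in [("proportional", proportional_policy), ("idle", idle_policy)]:
        rate = evaluate_policy(policy, toy_world_model)
        print(f"{name}: simulated success rate = {rate:.2f}")
```

The point of the sketch is the workflow, not the model: candidate policies are ranked by simulated success rate, and only the promising ones would need to go on to physical testing.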

This is important because the gap between laboratory demonstrations and factory floors remains significant. A robot that functions flawlessly in controlled conditions often struggles with the unpredictable variations of real-world environments: different lighting, unfamiliar objects, unexpected obstacles. By training on 44,000 hours of diverse human video spanning thousands of scenes and nearly 100 different skills, DreamDojo aims to create the kind of general physical intuition that makes robots adaptable rather than brittle.

The research team, led by Linxi "Jim" Fan, Joel Jang, and Yuke Zhu, with Shenyuan Gao and William Liang as co-first authors, indicated that the code will be released to the public, although the timeline was not specified.

The bigger picture: Nvidia’s transformation from gaming giant to robotics powerhouse

Whether DreamDojo translates into commercial robotics products remains to be seen. But the research signals where Nvidia’s ambitions are headed as the company increasingly positions itself beyond its gaming roots. As Kyle Barr observed at Gizmodo earlier this month, Nvidia now views "anything related to gaming and the ‘personal computer’" as "outliers in Nvidia’s quarterly spreadsheets."

The move represents a calculated bet: that the future of computing is physical, not just digital. Nvidia has already invested $10 billion in Anthropic and signaled plans to invest heavily in OpenAI’s next round of funding. DreamDojo suggests that the company sees humanoid robots as the next frontier where AI expertise and chip dominance can converge.

For now, the 44,000 hours of human video at the heart of DreamDojo represent something more fundamental than a technical benchmark. They represent a theory: that robots can learn to navigate our world by watching us live in it. Machines, it turns out, take notes.